Managing the century of complexity: Origins, evolution and productive future avenues with systems thinking

Abstract: Progress in human society's technological and social development has come at the cost of staggering complexity. Understanding the origin, evolution, and consequences of this complexity is essential for human managers who want to lay the groundwork for reducing it in their work. Examples from the war in Afghanistan (2001-2021) and Pleistocene Park in northern Siberia support a recommended systems thinking framework and associated skills.

Context

Stephen Hawking famously stated that the 21st century would be the “century of complexity” [7]. Two decades in, most of us would agree with this statement. Nevertheless, there is something inherently passive in merely acknowledging such issues, and I decided to embark on a journey to discover more productive efforts. Sure - the world is complex, but what can we do about it? This is the goal of the emerging field of progress studies.

This essay borrows heavily from the terminology of cybernetics, systems thinking, and philosophy, which requires some careful definition setting to avoid ambiguity. Questions such as "what is more complex - a cucumber or a car?" have one answer: it depends. It depends on the scale and on our goals with the problem. In our case, we are interested in complex systems. I have covered the basic systemic definitions in the Elements of Data Strategy. Now let us focus on the goal of this article: understanding the evolution of complex systems over time and how we can influence them productively in the future.

We can visualize complexity to set the context for the rest of the discussion. There are many facets to this in the modern world, but I decided to use one that is easier to understand as a proxy for overall complexity. In Figure 1, let us look at society’s growing complexity across three historical eras in terms of increasing job specialization.

Here, we use a type of fractal called the Dragon curve (fractals are commonly used to describe how complexity can arise from seemingly simple initial conditions [12]). What immediately catches our attention is how few roles there were in prehistoric society: hunters, gatherers, and soldiers. Let us look at the complexity profile of this stage of human civilization. While efficiency at this stage is low (it can be measured in different ways, most comprehensively in terms of energy production and consumption, as done by Vaclav Smil [10]), the risk that such a system carries is also low. For example, any hunter can readily be replaced by another human, since those skills are relatively easy to teach. Another way to look at the complexity of this system is to read the pattern as a supply chain that moves goods (or other energy equivalents) between different social groups. In the case of prehistoric society, the chain is relatively short, again leading to lower risk in case of disruptions.

The second fractal represents agricultural society. Here we start to see the first roots of specialization, resulting in clusters of similar roles. A food production cluster appears, with new, more specialized roles such as tracker and herder. The risk profile of this stage is relatively similar, but with all the values higher.
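To make the idea of complexity arising from simple rules concrete, here is a minimal sketch of one common way to generate a Dragon curve, the paper-folding rule. It is an illustration only and not necessarily how Figure 1 was produced; the function names `dragon_turns` and `dragon_points` are my own.

```python
def dragon_turns(iterations: int) -> list[int]:
    """Turn sequence of the Dragon curve (+1 = left turn, -1 = right turn)."""
    turns: list[int] = []
    for _ in range(iterations):
        # Unfold once: the old turns, one new left turn,
        # then the old turns reversed and mirrored.
        turns = turns + [1] + [-t for t in reversed(turns)]
    return turns


def dragon_points(iterations: int) -> list[tuple[int, int]]:
    """Walk the curve on a unit grid and return every visited point."""
    x, y = 0, 0
    dx, dy = 1, 0  # start by heading east
    points = [(x, y)]
    x, y = x + dx, y + dy
    points.append((x, y))
    for turn in dragon_turns(iterations):
        # Rotate the heading by 90 degrees, left or right.
        dx, dy = (-dy, dx) if turn == 1 else (dy, -dx)
        x, y = x + dx, y + dy
        points.append((x, y))
    return points


# Each iteration doubles the number of segments: 2, 4, 8, ...
for n in range(1, 6):
    print(n, len(dragon_points(n)) - 1)
```

The whole rule fits in one line of code, yet every extra iteration doubles the length of the path, which is the property that makes the curve a convenient picture of growing specialization.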

Those two fractals contrast starkly with the state of our modern civilization. Here the number of possible roles has increased by orders of magnitude. This has resulted in tremendous gains in efficiency, since each separate role often requires years of training and experience, providing optimal execution and utilization of human and energy resources. A side effect is extremely long and complex supply chains of goods and energy on a global scale. Society pays for this gain in efficiency with a correspondingly huge increase in systemic risk, as observed, for example, when supply chains from China broke down at the start of the Covid-19 pandemic in 2020 [11]. There is also a dangerous positive feedback loop: the abundance of low-price products increases their usage and demand, further driving production up (for example, cheap LED lights or streaming services).

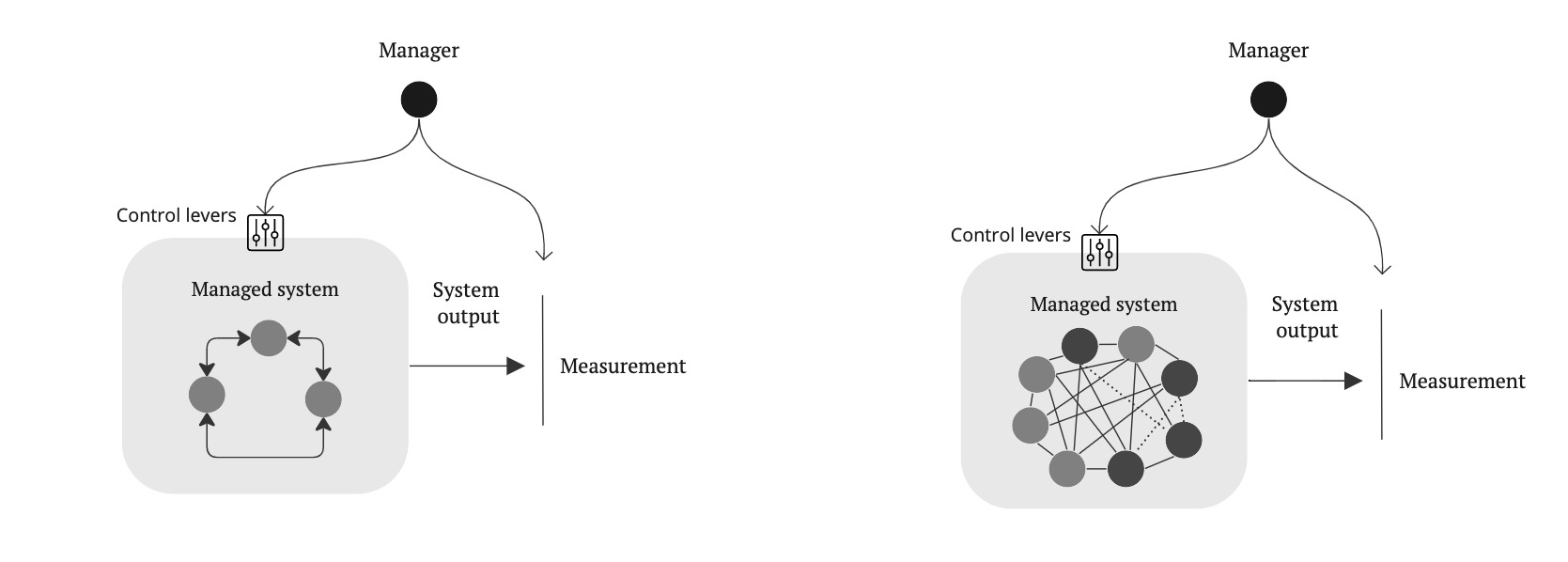

We see how evolving complexity results in risk. Still, why can’t we effectively manage this? We can illustrate the issue by introducing the role of a human manager - the person responsible for steering the complex system in a new direction. Let us contrast two systems - a simple and a complex one (for example, the prehistoric society and the modern one) in Figure 2.

The first prerequisite for our thought experiment is that the manager has a goal: to influence the system under management in a certain direction. To do this, they require two devices. First, control levers with which they can influence the system. Second, measurement sensors with which they can monitor the results of their actions and determine the direction of the system's output. (One interesting tangential idea here is that a human manager is only necessary when there are inefficiencies in a system. A truly optimized system should run like an automated factory.)

For simplicity's sake, our proxy metric for system complexity will be the number of interactions between the system members (between the hunters, soldiers, and gatherers, for example). In a system with three members, the count is

$$3! = 3 \times 2 \times 1 = 6$$

interactions. It is safe to say that a human manager can ensure the smooth operation of six daily interactions, so there is no problem here. What happens if we have eight members? (The average number of members of a prehistoric tribe is closer to 200.) Even with eight, we have a combinatorial explosion on our hands:

$$8! = 40{,}320$$

interactions! With just eight people, it becomes apparent that a human manager cannot control all interactions perfectly. This is Ashby’s Law of Requisite Variety [4] at play. Moreover, such a system also tends to exhibit emergent properties that stay hidden in a smaller group. For example, some members of the group can start to form a clique and communicate among themselves in a different way. This is yet another problem for the manager to solve, and one that is impossible to plan for in advance.
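A quick way to get a feel for this growth is to compute it. The minimal sketch below compares two counting conventions that both give six for three members: ordered pairs of members, and orderings of the whole group. Both function names and the choice of conventions are illustrative assumptions.

```python
import math


def ordered_pairs(n: int) -> int:
    """Directed one-to-one interactions: each member addresses every other member."""
    return n * (n - 1)


def orderings(n: int) -> int:
    """Number of distinct orderings of the whole group (n factorial)."""
    return math.factorial(n)


for n in (3, 8, 200):
    digits = len(str(orderings(n)))
    print(f"{n} members: {ordered_pairs(n)} ordered pairs, "
          f"n! has {digits} digit(s)")
```

Whichever convention is used, the count outgrows what one person can track long before the group reaches the size of a tribe.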

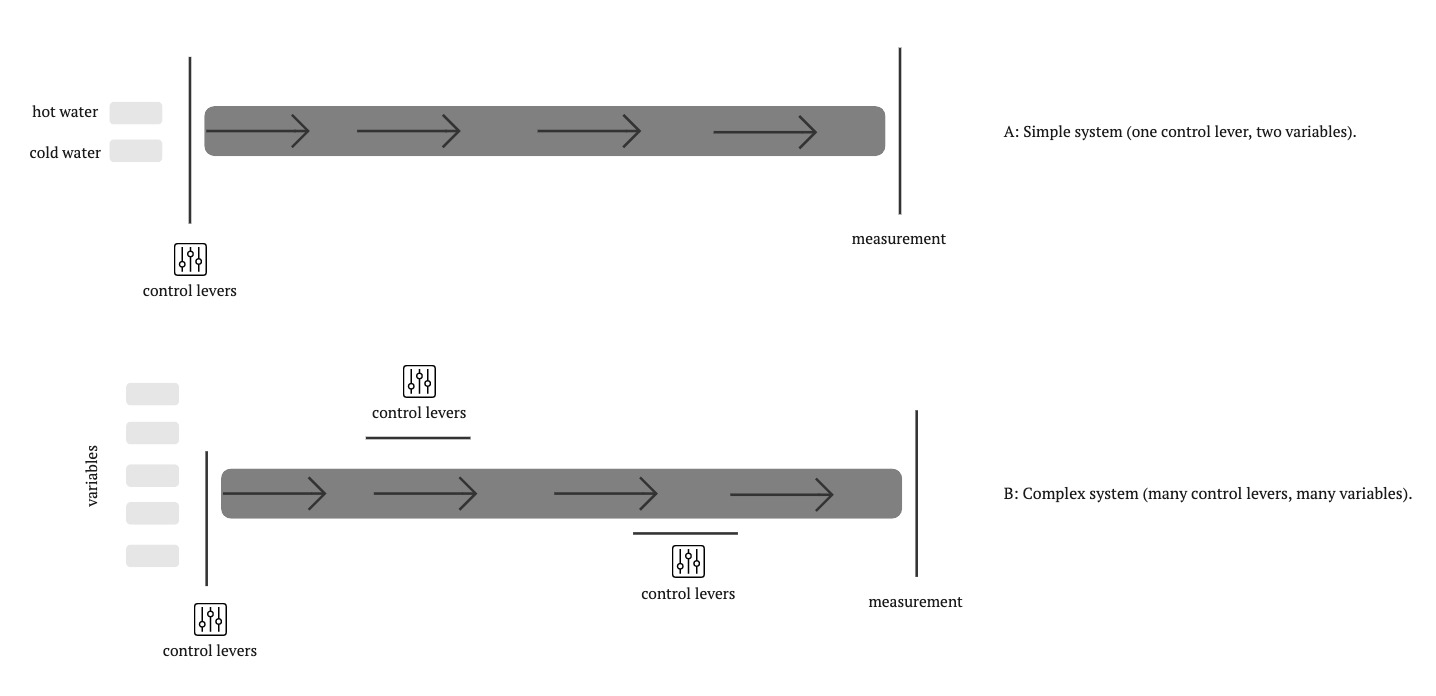

Let us next look at the control levers of a manager and discover a new set of issues there. Here is an analogy from an everyday activity, taking a shower: System A in Figure 3. We have hot and cold water as variables, and our goal is for the water to be the right temperature for us at the end of the flow. We can already see (and also know from experience) how the delay between the levers and the measurement makes the system difficult to manage. The longer the delay, the harder it becomes to adjust the controls to reach the right temperature.
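The effect of the delay can be sketched with a toy simulation. It assumes a person who nudges the lever in proportion to the gap between the target and the temperature they currently feel, while the water they feel was set `delay` steps earlier. All parameter values are made up for illustration, and the model is linear with no physical limits, so the numbers are not realistic temperatures.

```python
def simulate_shower(delay: int, gain: float = 0.5, target: float = 38.0,
                    start: float = 20.0, steps: int = 60) -> list[float]:
    """Return the temperature felt at each step under delayed feedback."""
    # History of lever settings, expressed as the temperature each will produce.
    settings = [start] * (delay + 1)
    felt = []
    for _ in range(steps):
        # The water arriving now reflects the setting chosen `delay` steps ago.
        observed = settings[-1 - delay]
        felt.append(observed)
        # Proportional correction based on what is felt right now.
        settings.append(settings[-1] + gain * (target - observed))
    return felt


no_delay = simulate_shower(delay=0)
delayed = simulate_shower(delay=5)
print("no delay, worst error at the end:",
      max(abs(t - 38.0) for t in no_delay[-10:]))
print("delay of 5, worst error overall:",
      max(abs(t - 38.0) for t in delayed))
```

With no delay, the same correction rule settles smoothly on the target. With a delay of five steps it overshoots and swings further away each time, because every correction is based on outdated information.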

Real-world situations are often more complex than this analogy. System B should remind us of another temperature we want to regulate: climate change. In this case, we not only have massive delays between cause and effect, but also many more contributing factors, together with the various interactions between them, some of them still unknown. Those can also operate in different parts of the cycles (and in different geographic areas), influencing our measurement. For a system like that, how are we supposed to know which control levers (we have more of those, too) produce which effects, and how do we ensure we take appropriate action to steer the system in the right direction? This cascading system of stocks, flows, and variables [8] is an apotheosis of a black box complex system.

Case studies

Negative example: Afghanistan War

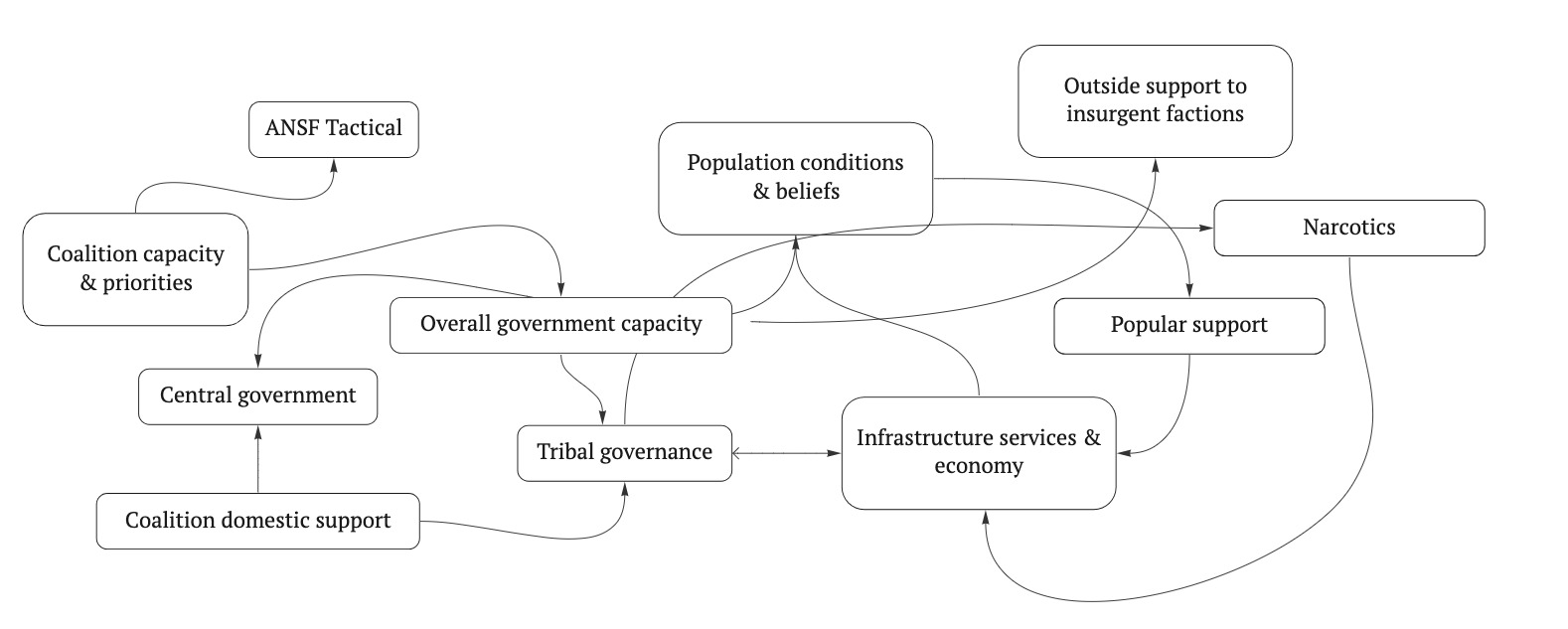

Unfortunately, there are many negative examples where human management has failed to influence complex systems successfully. For our purposes, I decided to select one of the most well-known and devastating examples: the war in Afghanistan between 2001 and 2021. This country has been named "the graveyard of empires" [5] for a good reason. Many great powers have tried to capture and control this land, primarily due to its strategic geographic position at the crossroads of Central and South Asia. Despite overwhelming superiority in resources and training, all those forces ultimately failed in their overall strategic objectives.

We can argue that the main reason for those failures is the complexity of the system that the external forces wanted to influence, together with conflicting goals and a lack of information measurement [9]. This is best illustrated in a diagram from a strategy slide deck called “Dynamic Planning for COIN in Afghanistan” (COIN stands for counterinsurgency; the diagram is presented in an article in the New Yorker). In Figure 4, I provide a simplified version of the slide without most of the sub-level elements and their interactions.

Not only did all those different systems need to be understood, but so did their often unpredictable interactions. This is a classic example of drowning in complexity while more straightforward strategies are available, for example, controlling the main Afghanistan ring road [1] (an example of how simple heuristics are sometimes superior to a centralized, rigid strategy).

Positive example: Pleistocene Park

Paleoecologists discovered that around the end of the last ice age, the ecosystem of northern Siberia had much higher biodiversity and complexity than it does today [13]. Back then, large predators and grazers roamed the land, prowling mixed forests and grasslands, in contrast with today's largely desolate tundra landscape of shrubs and mosses. One of the primary explanations is the arrival of humans in those newly ice-free areas and the subsequent extinction of large animals, which a growing human population needed as an energy source. This, in turn, resulted in much lower biodiversity. For example, the disappearance of the woolly mammoths meant that fallen trees were no longer turned over to enrich the soil (an effect currently also observed with African elephants in their habitat), which in turn left the soil less able to support complex flora [13].

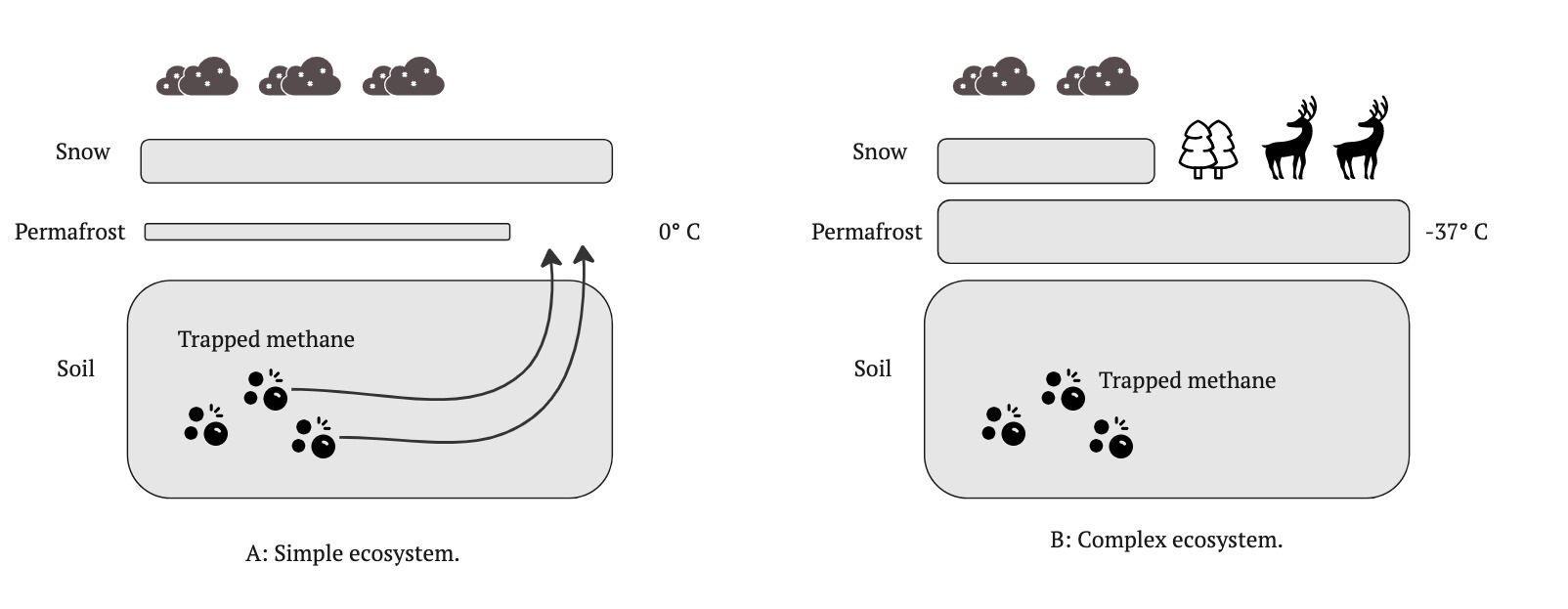

The scientists decided it would be an interesting experiment to attempt to re-engineer the old ecosystem by reintroducing large fauna species. They also discovered a second goal for such an experiment: reducing the effects of climate change [6]. The more complex ecological system would protect the permafrost layer in the soil, a vital barrier shielding the atmosphere from the vast amounts of the greenhouse gas methane that could be released on exposure. Figure 5 shows how a simple and a complex ecosystem each affect this.

The experiment showed that the large fauna species, especially grazers, ensured a much thinner layer of insulating snow above the permafrost. This resulted in much colder soil that stays cold much longer over the winter, ensuring that methane remains trapped beneath the surface.

Productive patterns

I will use those two examples to show how a systems thinking process and its associated skillset (developed by Arnold and Wade [2, 3]) provide a framework for successful action on complex systems.

Identifying systems

- Case 1: This part was done correctly in Afghanistan, but it took some time. Only in the later military engagements was the US army able to compile an exhaustive list of all components and their interactions.

- Case 2: The scientists were able to successfully identify the boundaries of all the different systems - the flora, fauna, atmosphere, snow, permafrost, and soil.

- Essential skills: Use mental modeling and abstraction, recognize systems, maintain boundaries.

Understanding systems

- Case 1: This is the real battlefield where failures started to accumulate. The effects of a lack of understanding of the complex insurgency network also became apparent only much later, rendering any corrections obsolete by the time they were made.

- Case 2: While a perfect understanding of a complex ecosystem is almost impossible, the researchers focused on controlled experiments and measurements to ensure they understood the systems under management.

- Essential skills: Explore multiple perspectives, differentiate and quantify elements, and identify and characterize relationships.

Predicting system behavior

- Case 1: This is the most visible way in which the military operation failed to meet its objectives: the inability to predict how the system would behave frustrated many of the actions attempted.

- Case 2: By isolating the components and being cognizant of past behavior and feedback loops, the scientists could predict the system behavior to a reasonable extent. What was also impressive is that the scientists realized that this complex system is not bound to have just one effect that needs measurement: they could aim both to increase biological diversity and to mitigate the detrimental effects of climate change.

- Essential skills: Identify and characterize feedback loops, understand what happens when content and structure interact, describe past system behavior.

Devising modifications to systems to produce desired effects

- Case 1: The lack of understanding of system behavior and the inability to predict it made any modifications counterproductive, even if they appeared at first glance to be the right course of action.

- Case 2: The excellent groundwork in systems thinking ensured that any remaining complexity was correctly managed and the goals of the Pleistocene Park accomplished.

- Essential skills: Effectively respond to uncertainty and ambiguity, respond to changes over time, use leverage points to produce effects.

Conclusion

The complexity around us is bound to only increase in the future. While it is helpful to characterize the issues we face, it is time to start working productively on them. There are frameworks available, but more importantly, the new generation of human managers has to be taught the fundamental system skills - equipping them with the tools needed to reduce complexity and direct the systems under our management to a favorable outcome for us as a civilization - and our planet.

References

1. Amiri, M. A. (2013). Road reconstruction in post-conflict Afghanistan: A cure or a curse? International Affairs Review, XXI(2).
2. Arnold, R. D. and Wade, J. P. (2015). A definition of systems thinking: A systems approach. Procedia Computer Science, 44:669–678.
3. Arnold, R. D. and Wade, J. P. (2017). A complete set of systems thinking skills. Insight, 20(3):9–17.
4. Ashby, W. R. (1991). Requisite variety and its implications for the control of complex systems. In Facets of Systems Science, pages 405–417. Springer.
5. Fergusson, J. and Hughes, R. G. (2019). ‘Graveyard of empires’: Geopolitics, war and the tragedy of Afghanistan. Intelligence and National Security, 34(7):1070–1084.
6. Fischer, W., Thomas, C., Zimov, N., and Göckede, M. (2022). Grazing enhances carbon cycling, but reduces methane emission in the Siberian Pleistocene Park tundra site. Biogeosciences, 19(6):1611–1633.
7. Hawking, S. and Dolby, T. (2000). What is complexity? The Washington Center for Complexity. http://www.complexsys.org/downloads/whatiscomplexity.pdf
8. Meadows, D. H. (2008). Thinking in Systems: A Primer. Chelsea Green Publishing.
9. Ritchie, M. (2021). War misguidance: Visualizing quagmire in the US war in Afghanistan. Media, War & Conflict, page 1750635220985272.
10. Smil, V. (2018). Energy and Civilization: A History. MIT Press.
11. Wang, Y., Wang, J., and Wang, X. (2020). Covid-19, supply chain disruption and China’s hog market: A dynamic analysis. China Agricultural Economic Review.
12. Wolfram, S. and Gad-el Hak, M. (2003). A new kind of science. Appl. Mech. Rev., 56(2):B18–B19.
13. Zimov, S. A. (2005). Pleistocene Park: Return of the mammoth’s ecosystem. Science, 308(5723):796–798.